Wireless Mixed Reality with LPVR-AIR 3.3 and Meta Quest

Achieving Accurate Mixed Reality Overlays

In a previous blog post we showed how difficult it is to precisely align virtual and real content using the Varjo XR-3 mixed reality headset. Although the Varjo XR-3 is a high-quality headset and we tracked it accurately with LPVR-CAD, we had difficulty achieving correct alignment at different angles and distances from an object. We concluded that the relatively large distance between the HMD’s video passthrough cameras and its displays causes distortions that are hard for the Varjo HMD’s software to correct.

Only recently have consumer virtual reality headsets like the Meta Quest 3 been equipped with video passthrough cameras and displays whose image quality is comparable to that of the Varjo headsets. We have therefore started extending our LPVR-AIR wireless VR software with mixed reality capabilities. This lets us create the same kind of augmented reality scenarios with the Quest 3 as with the Varjo XR series HMDs.

Full MR Solution with LPVR-AIR and Meta Quest

The Quest 3 uses pancake optics, which allow a much shorter distance between the passthrough cameras and the displays. As a result, the HMD has to apply less correction to the camera images to align virtual and real content accurately. We show this in the video above. We’re tracking the HMD using our LPVR-AIR sensor fusion and an ART Smarttrack 3 outside-in tracking system. Although the tracking accuracy is limited because the tracking camera is placed relatively far away from the HMD, we achieve very good alignment between the virtual cube and the real cardboard box, even at varying distances from the object.

This shows that a state-of-the-art mixed reality setup can be built from a consumer-grade HMD like the Meta Quest 3 combined with a cost-efficient outside-in tracking system. The wireless connection between the Quest 3 and the rendering server further adds to the ease of use of this solution.

The overlay accuracy of this solution is superior to that of all other solutions on the market that we’ve tried. Marker-based outside-in tracking guarantees long-term accuracy and repeatability, which are often a problem with inside-out or Lighthouse-based tracking. This functionality is supported from LPVR-AIR version 3.3 onward.

Controller and Optical Marker Tracking

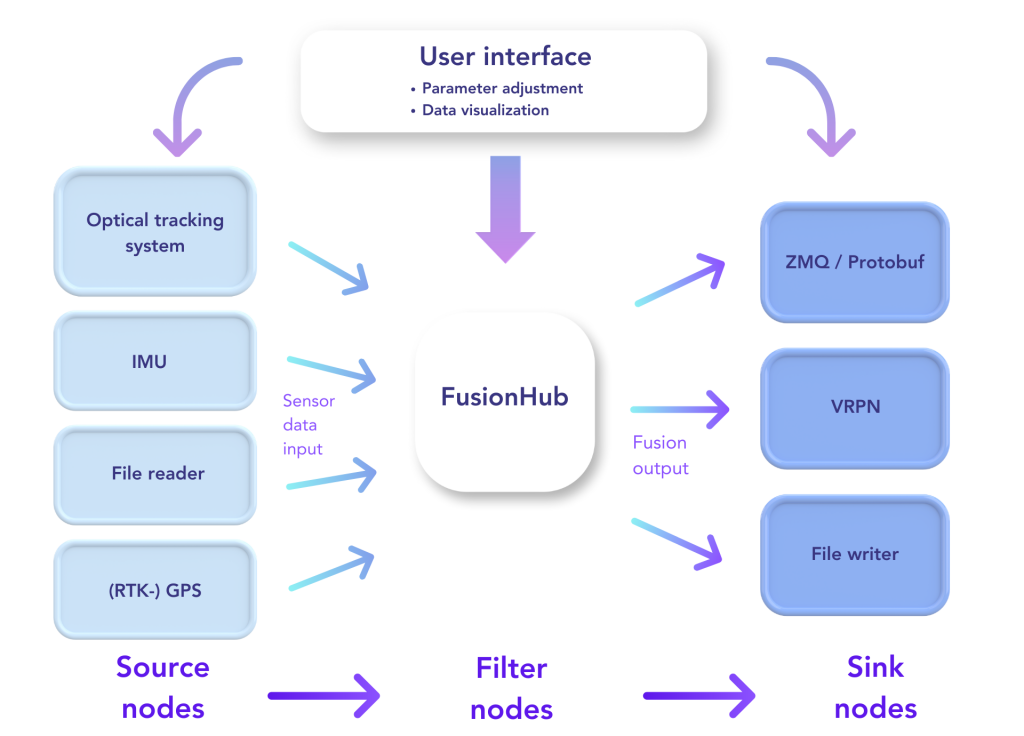

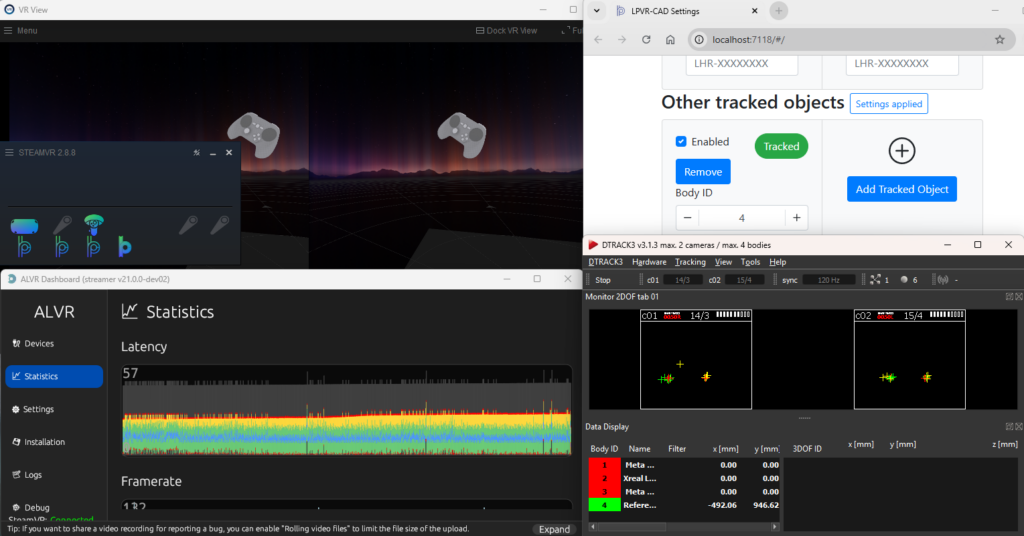

In addition to delivering high-quality mixed reality and precise wireless headset tracking, LPVR-AIR seamlessly integrates controllers tracked by the HMD’s inside-out system with objects tracked via optical targets in the outside-in tracking frame, all within a unified global frame. The video above shows this unique capability in action.
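One generic way to picture such a unified frame: because the HMD pose is known both in the headset’s own inside-out frame and in the outside-in frame, the transform between the two frames can be derived from it and then applied to every inside-out controller pose. The minimal sketch below assumes synchronized, noise-free poses given as 4x4 homogeneous matrices; the function names (`pose`, `invert`, `controller_in_global`) are hypothetical and this is not LPVR-AIR’s actual implementation, which would also have to deal with latency, noise and drift of the inside-out origin.

```python
import numpy as np


def pose(rotation, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def invert(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    return pose(R.T, -R.T @ t)


def rot_z(angle_rad: float) -> np.ndarray:
    """Rotation about the z axis (helper for the example)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def controller_in_global(T_global_hmd, T_inside_hmd, T_inside_ctrl):
    """Express an inside-out controller pose in the outside-in (global) frame.

    T_global_hmd:  HMD pose measured by the outside-in system (hypothetical input)
    T_inside_hmd:  HMD pose reported by the headset's inside-out tracking
    T_inside_ctrl: controller pose reported by the inside-out tracking
    """
    # The HMD is seen in both frames at the same instant, so this product is
    # the transform that maps inside-out coordinates into the global frame.
    T_global_inside = T_global_hmd @ invert(T_inside_hmd)
    return T_global_inside @ T_inside_ctrl


# Example: the inside-out frame is rotated 90 degrees about z and offset
# by (1, 2, 0) m relative to the outside-in frame.
T_true = pose(rot_z(np.pi / 2), [1.0, 2.0, 0.0])
T_inside_hmd = pose(np.eye(3), [0.0, 0.0, 1.6])
T_global_hmd = T_true @ T_inside_hmd
T_inside_ctrl = pose(np.eye(3), [0.3, 0.0, 1.2])
T_ctrl = controller_in_global(T_global_hmd, T_inside_hmd, T_inside_ctrl)
print(np.round(T_ctrl[:3, 3], 3))  # controller position in the global frame
```

In this example the controller ends up at roughly (1.0, 2.3, 1.2) m in the global frame, so it can be rendered alongside objects tracked by optical targets. Using the closed-form rigid inverse keeps the rotation block orthonormal rather than relying on a general matrix inversion.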

When combined with our LPVR-CAD software, LPVR-AIR enables the tracking of any number of rigid bodies within the outside-in tracking volume. This provides an intuitive solution for tracking objects such as vehicle doors, steering wheels, or other cockpit components. Outside-in optical markers are lightweight, cost-effective, and require no power supply. With camera-based outside-in tracking, all objects within the tracking volume remain continuously tracked, regardless of whether the user is looking at them. Their positions are tracked with millimeter accuracy, and tracking works reliably under any lighting conditions, from bright daylight to dark studio environments.

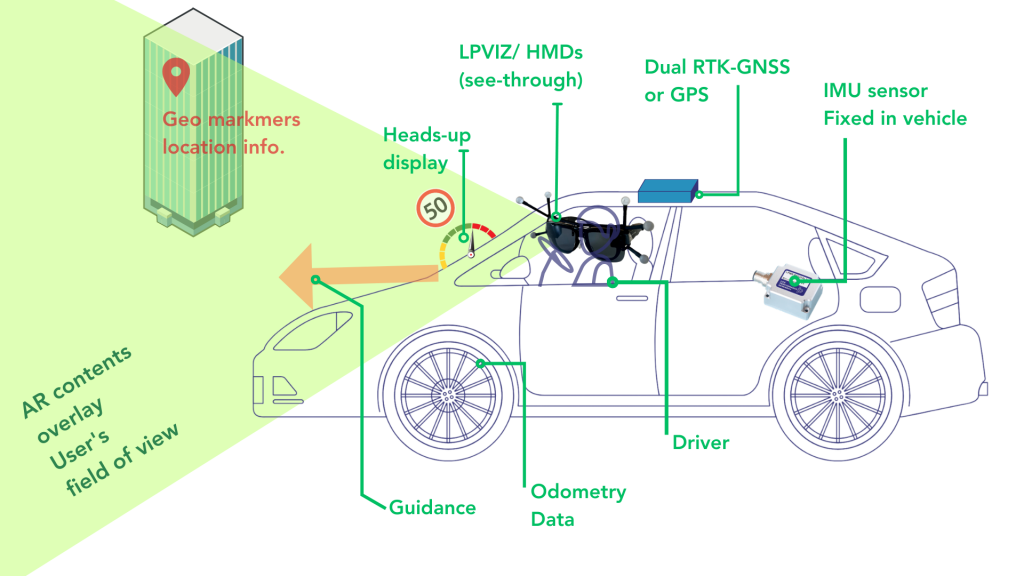

In-Car Head Tracking with LPVR-AIR

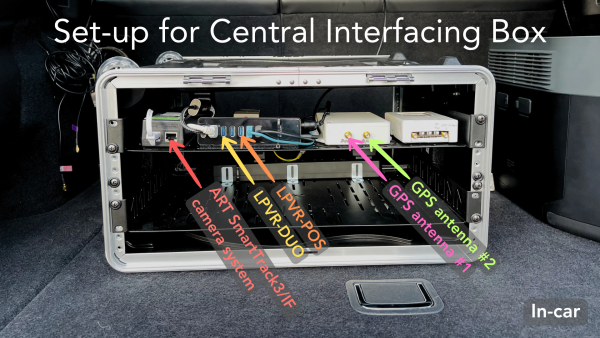

After confirming that LPVR-AIR works well in large room-scale mixed reality setups, we began extending it to perform accurate head tracking in a moving vehicle or on a simulator motion platform. For this purpose we ported the algorithm used by our LPVR-DUO solution to LPVR-AIR. With some adjustments we reached performance very similar to that of LPVR-DUO, this time in a wireless setup.
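The core difficulty in a moving vehicle is that the HMD’s gyroscope measures the car’s rotation plus the head’s rotation, while the application needs only the head’s motion relative to the cockpit. The sketch below illustrates one textbook approach, assuming a second IMU rigidly mounted to the vehicle: rotate the vehicle’s angular rate into the HMD frame, subtract it, and integrate the remainder. It is a hypothetical illustration of the principle (function names included), not the LPVR-DUO algorithm, and gyro-only integration drifts, so a real system would correct it with an absolute reference such as optical tracking.

```python
import numpy as np


def skew(w) -> np.ndarray:
    """Cross-product matrix [w]x, so that skew(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def so3_exp(phi) -> np.ndarray:
    """Rodrigues' formula: rotation matrix for rotation vector phi (rad)."""
    phi = np.asarray(phi, dtype=float)
    angle = np.linalg.norm(phi)
    if angle < 1e-12:
        return np.eye(3) + skew(phi)  # first-order approximation near zero
    K = skew(phi / angle)
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def update_head_in_vehicle(R_vh, gyro_hmd, gyro_vehicle, dt):
    """One integration step of the head orientation relative to the vehicle.

    R_vh:         3x3 rotation mapping HMD-frame vectors into the vehicle frame
    gyro_hmd:     angular rate from the HMD IMU, in the HMD frame (rad/s)
    gyro_vehicle: angular rate from a vehicle-fixed IMU, in the vehicle frame (rad/s)
    dt:           time step (s)
    """
    # Express the vehicle's rotation in the HMD frame and remove it, leaving
    # only the head's rotation relative to the car.
    w_rel = np.asarray(gyro_hmd, dtype=float) - R_vh.T @ np.asarray(gyro_vehicle, dtype=float)
    # Right-multiply because w_rel is a body-frame (HMD-frame) rate.
    return R_vh @ so3_exp(w_rel * dt)


# Example: the car yaws at 0.5 rad/s while the head turns a further 0.2 rad/s
# relative to it, so the HMD gyro reads 0.7 rad/s about z for one second.
R_vh = np.eye(3)
for _ in range(100):
    R_vh = update_head_in_vehicle(R_vh, [0.0, 0.0, 0.7], [0.0, 0.0, 0.5], 0.01)
```

After one second the relative orientation corresponds to a 0.2 rad yaw: the car’s own turn is removed, so the virtual cockpit stays fixed to the real one instead of sliding as the vehicle corners.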

While the video passthrough quality of the Quest and Varjo HMDs is comparable in both daytime and nighttime scenarios, the lightness and comfort of a wireless solution are a big advantage. Compatibility with all OpenVR and OpenXR applications on the rendering server makes this solution a unique, state-of-the-art simulation and prototyping tool for automotive and aerospace product development.

Release notes

See the release notes for LPVR-AIR 3.3 here.