LPVR New Release 4.9.2 – Varjo XR-4 Controller Integration and Key Improvements

New release LPVR-CAD and LPVR-DUO 4.9.2

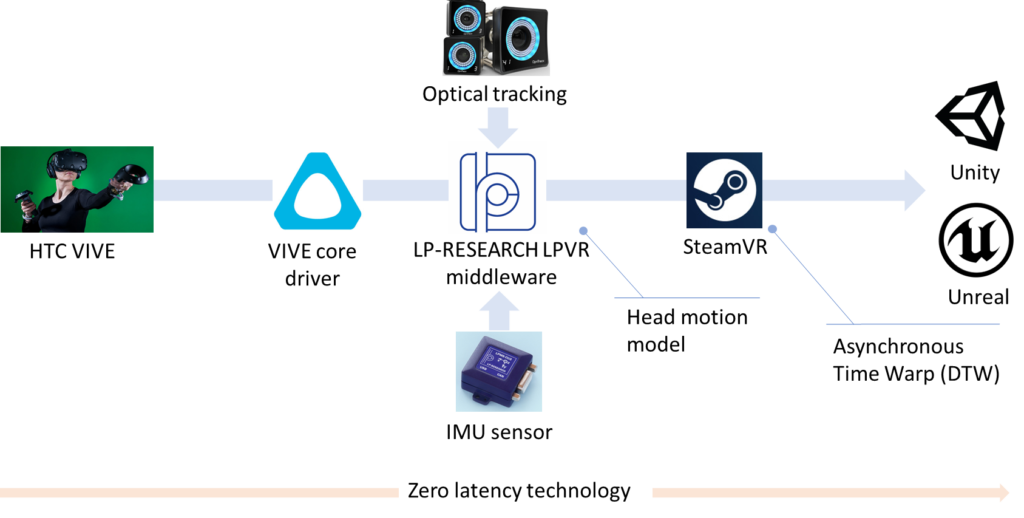

As with any software, our LPVR-CAD and LPVR-DUO products for high-fidelity VR and AR need maintenance updates. Keeping up to date with the wide range of supported hardware and fixing newly discovered issues necessitates a release every now and then, and our latest release, LPVR-CAD 4.9.2 and LPVR-DUO 4.9.2, is no different. This blog post summarizes the changes in this version.

Support for Varjo XR-4 Controllers

The feature with the highest visibility is support for the hand controllers that Varjo ships with the Varjo XR-4 headset. These controllers are tracked by the headset itself, and Varjo Base 4.4 adds an opt-in way of supporting them with LPVR-CAD. Varjo does not enable the controllers by default because the increased USB traffic can negatively affect performance on some systems, and so an LPVR user has to decide whether the added support is worth it on their system. Of course, we also continue supporting the SteamVR controllers together with LPVR-CAD. We detailed their use with the XR-4 in our documentation.

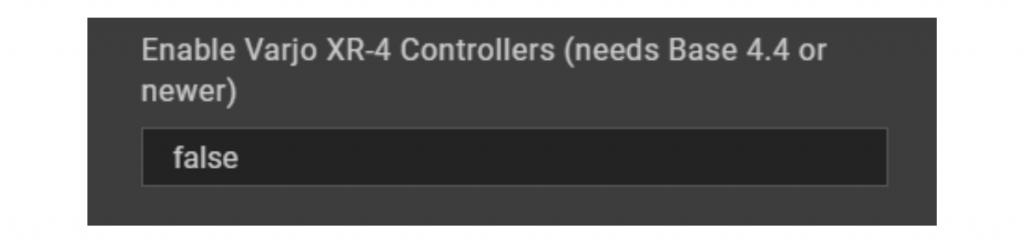

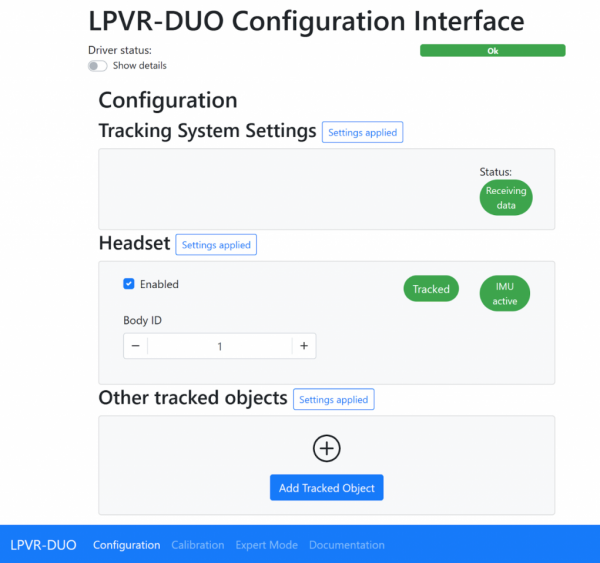

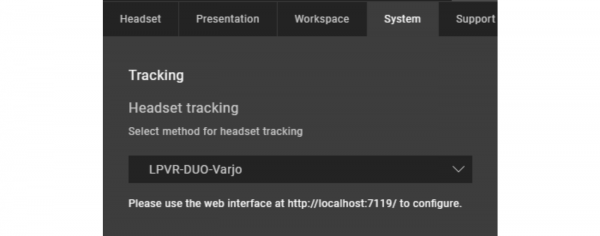

To enable the Varjo controllers in LPVR-CAD, open Varjo Base and navigate to the System tab. When LPVR-CAD is configured, you will find a new input field there, as shown in the screenshots.

Setting its value to “true” enables controller support, and “false” disables it. After changing the value, scroll down to the Submit button and click it to apply the change. Varjo also recommends restarting Varjo Base after making this change.

Please note that this input is handled by Varjo Base itself, so the input field also appears in older versions of Varjo Base, for reasons that are beyond the scope of this post. For both Varjo and us, providing this support quickly took priority over polish. One issue that can cause confusion is that the Varjo Home screen does not display the controllers, at least in Varjo Base 4.4.0. In addition, Unity applications have to be updated to a recent version of the Varjo plugin. Varjo is working on resolving these issues.

Updated Support for JVC HMD-VS1W

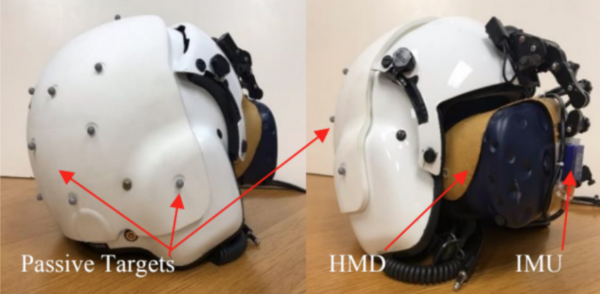

JVC’s HMD-VS1W is an interesting see-through AR headset. It is a niche product, typically used in the aeronautical sector, that uses Valve tracking with a few custom twists. A recent software update on JVC’s side (version 1.5.0) broke compatibility with LPVR, but this was easy to fix and full compatibility has been restored.

Various other changes

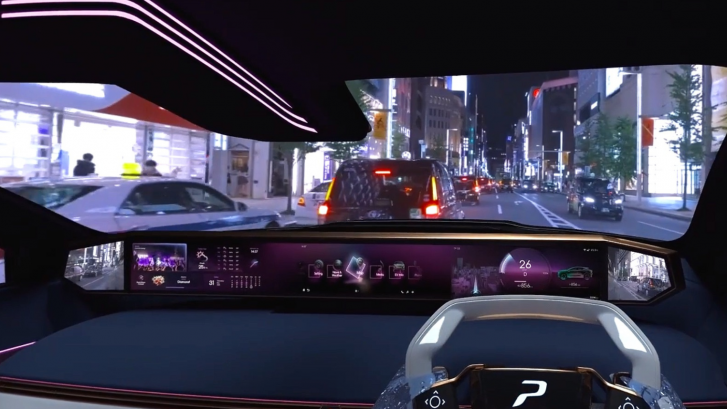

One of the key points when creating an immersive VR and AR experience is that the motion should appear as smooth as possible. We are therefore constantly refining our algorithms to meet that goal. This release significantly improves the smoothness of rotations, especially for Varjo’s third-generation headsets such as the Varjo Aero and the Varjo XR-3.

We fixed an issue where, under some circumstances, LPVR-DUO would crash after calibrating the platform IMU. The cause was a multi-threading issue that led to a so-called deadlock in the driver.
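For readers unfamiliar with the term: a deadlock occurs when threads wait on each other indefinitely, for example when two threads each hold one lock and wait for the other's. The following is a generic Python sketch of one common way to avoid this, namely always acquiring locks in the same order. It is not the LPVR driver code, and the lock and function names (`imu_lock`, `pose_lock`, `calibrate_imu`, `update_pose`) are hypothetical; the actual cause of the fixed issue may well have been different.

```python
import threading

# Hypothetical locks; the names are illustrative and not taken from LPVR.
imu_lock = threading.Lock()
pose_lock = threading.Lock()


def calibrate_imu(log):
    # Always take imu_lock first, then pose_lock.
    with imu_lock:
        with pose_lock:
            log.append("calibrate")


def update_pose(log):
    # Same order as above. Taking pose_lock first here could deadlock
    # if calibrate_imu ran at the same time in another thread.
    with imu_lock:
        with pose_lock:
            log.append("pose")


def repeat(func, log, iterations):
    for _ in range(iterations):
        func(log)


def run_demo(iterations=1000):
    """Run both functions concurrently and return the number of completed calls."""
    log = []
    workers = [
        threading.Thread(target=repeat, args=(calibrate_imu, log, iterations)),
        threading.Thread(target=repeat, args=(update_pose, log, iterations)),
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return len(log)
```

Because both functions acquire the locks in the same order, neither thread can end up holding one lock while waiting for the other, so `run_demo()` always finishes.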

We also added support for a global configuration of our SteamVR driver which can be overridden by local users. Since automatic support for this requires major changes to our installers and uninstallers, we decided to postpone enabling this feature by default. Please get in touch if that is something you want to use already.
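To illustrate the idea of a global configuration with per-user overrides, here is a minimal Python sketch. It assumes both configurations are nested dictionaries (for example, loaded from JSON files) and that local values take precedence key by key. The function name `merge_config` and all setting names are hypothetical and do not reflect the actual LPVR configuration schema or merge behavior.

```python
from typing import Any


def merge_config(global_cfg: dict[str, Any], local_cfg: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict in which local values override global ones.

    Nested dicts are merged recursively; any other value is replaced outright.
    """
    merged = dict(global_cfg)
    for key, value in local_cfg.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical settings; these keys are illustrative only.
GLOBAL_CFG = {"tracking": {"smoothing": 0.5, "prediction_ms": 10}, "log_level": "info"}
LOCAL_CFG = {"tracking": {"smoothing": 0.8}}

EFFECTIVE_CFG = merge_config(GLOBAL_CFG, LOCAL_CFG)
```

In this sketch, the local user only overrides `smoothing`, while `prediction_ms` and `log_level` are inherited from the global configuration.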

We have often recommended the so-called “freeGravity” feature to our users, as it improves visual performance in most circumstances. We have now changed the default for this setting to match the needs of the most common use cases.