What is In-Vehicle AR

This article describes our first steps in the development of an AR HMD for in-car, aerospace and naval applications.

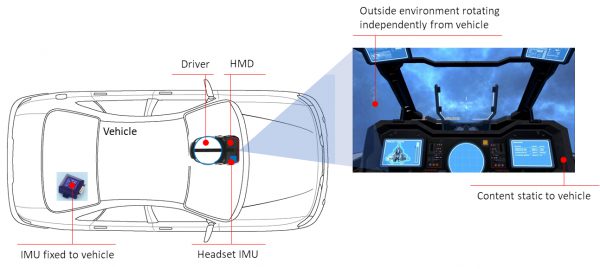

Over the past several years, we have developed our LPVR middleware. In its first version, the middleware enabled location-based VR by combining optical and IMU-based headset tracking. Building on this foundation, we extended the system into a tracking solution for transportation platforms such as cars, ships and airplanes (Figure 1).

In stationary applications, an IMU in the HMD is sufficient to track the headset's rotations. In a moving vehicle, however, the HMD's IMU measures the combined motion of the head and the vehicle. An additional IMU therefore needs to be fixed to the vehicle, and the data from this sensor must become part of the sensor fusion. We realized this with our LPVR-DUO tracking system.
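The key step of this fusion, separating head motion from vehicle motion, can be illustrated with a minimal sketch. It assumes that each IMU already provides a unit orientation quaternion (w, x, y, z) in a shared, gravity-aligned world frame. The function names are hypothetical, and the actual LPVR-DUO fusion (which also processes raw gyroscope data and corrects drift with optical tracking) is not shown here.

```python
import math
from typing import Tuple

# Unit quaternion stored as (w, x, y, z)
Quat = Tuple[float, float, float, float]


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (rotation b is applied first, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conj(q: Quat) -> Quat:
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def yaw_quat(deg: float) -> Quat:
    """Rotation about the vertical z axis by `deg` degrees."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))


def head_in_vehicle(q_world_hmd: Quat, q_world_vehicle: Quat) -> Quat:
    """Hypothetical helper: HMD orientation expressed in the vehicle frame.

    Both inputs map their body frame into the shared world frame, so
    q_vehicle_hmd = conj(q_world_vehicle) * q_world_hmd.
    """
    return quat_mul(quat_conj(q_world_vehicle), q_world_hmd)


# Example: the car has turned 90 degrees left and the driver's head points
# 120 degrees left in world coordinates, i.e. 30 degrees left inside the car.
q_rel = head_in_vehicle(yaw_quat(120.0), yaw_quat(90.0))
# q_rel is a 30-degree yaw, equal to yaw_quat(30.0) up to rounding.
```

Without this correction, every turn of the car would also rotate the virtual content in front of the user; expressing the head pose in the vehicle frame keeps in-car content, such as a virtual dashboard, fixed to the cabin.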

Applying this middleware to augmented reality headsets already on the market turned out to be challenging. Most AR HMDs rely on proprietary tracking technology that works for stationary use cases but fails in moving vehicles. Accessing such a tracking pipeline in order to extend it with our sensor fusion is usually not possible.

Figure 1 – Principle of in-car AR/VR as implemented with LPVR-DUO

Applications

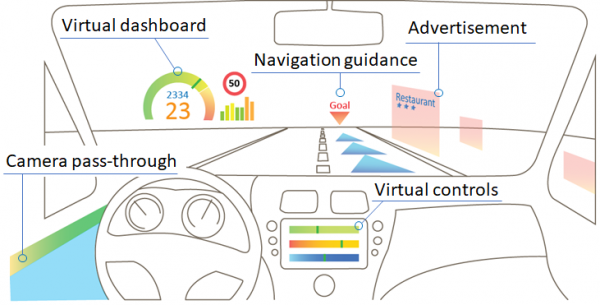

There is a wide range of applications for in-car augmented reality, from B2B use cases in design and development to consumer-facing scenarios. A few of them are shown in Figure 2.

Figure 2 – In-car AR use cases range from a simple virtual dashboard to interactive e-commerce applications. The “camera pass-through” enables the driver to virtually look through the car to see objects otherwise occluded by the car chassis.

HMD Specifications

Because the tracking of existing AR headsets cannot be extended for use in vehicles, we decided to start the development of LPVIZ, an AR HMD dedicated to in-vehicle applications. It is designed to meet the requirements of our customers in in-car, aerospace and naval applications as closely as possible:

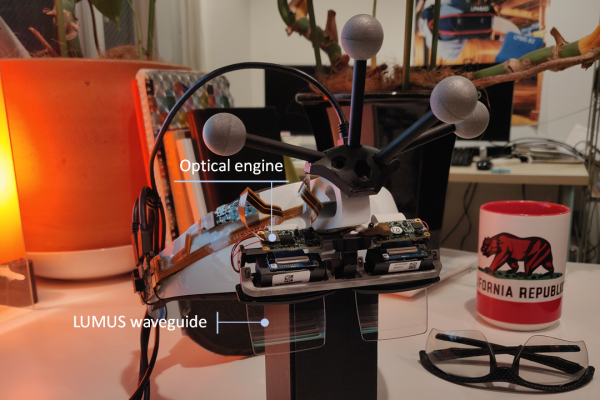

- Powerful optical engine (LUMUS waveguides) with a good field of view (FOV), unobstructed lateral vision for safety, low persistence and a high refresh rate

- System satisfies all requirements for immersive AR head tracking (pose prediction, head motion model, late latching, asynchronous timewarp etc.)

- HMD is tethered to the computing unit in the vehicle by a thin VirtualLink cable

- Computing unit is compact, but powerful enough to run SteamVR and thus supports a large range of software applications

- Options to use either outside-in or inside-out optical tracking inside the vehicle, as well as Leap Motion hand tracking
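Pose prediction, one of the head-tracking requirements listed above, compensates for the delay between sampling the sensors and displaying a frame. A common, simple approach, assumed here purely for illustration and not necessarily the head motion model used in LPVIZ, extrapolates the current orientation under the assumption of constant angular velocity over the expected latency. The function names and the 20 ms latency in the example are hypothetical.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
Vec3 = Tuple[float, float, float]


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def normalize(q: Quat) -> Quat:
    """Rescale to unit length to counter floating-point drift."""
    n = math.sqrt(sum(c * c for c in q))
    return (q[0] / n, q[1] / n, q[2] / n, q[3] / n)


def predict_orientation(q: Quat, omega_body: Vec3, dt: float) -> Quat:
    """Hypothetical helper: extrapolate orientation dt seconds ahead.

    omega_body is the gyroscope rate in rad/s, expressed in the HMD (body)
    frame, and is assumed constant over dt.
    """
    wx, wy, wz = omega_body
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    if rate * dt < 1e-12:
        return q  # negligible rotation; also avoids dividing by zero
    half = 0.5 * rate * dt
    s = math.sin(half) / rate  # sin(angle / 2) * unit axis == omega * s
    dq = (math.cos(half), wx * s, wy * s, wz * s)
    # A body-frame rate means the increment is applied on the right.
    return normalize(quat_mul(q, dq))


# Head turning at 90 deg/s about the vertical axis with an assumed 20 ms
# latency: the predicted orientation is rotated a further 1.8 degrees.
q_now = (1.0, 0.0, 0.0, 0.0)
q_pred = predict_orientation(q_now, (0.0, 0.0, math.radians(90.0)), 0.020)
```

Constant-velocity extrapolation is cheap enough to run right before rendering and again at late-latching time; more elaborate head motion models can reduce overshoot when the head stops abruptly.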

In-Car HMD Hardware Prototype Development

We have recently built the first prototype of LPVIZ. Its hardware development is still at a very early stage, but the prototype is sufficient to demonstrate our core functionality and use case.

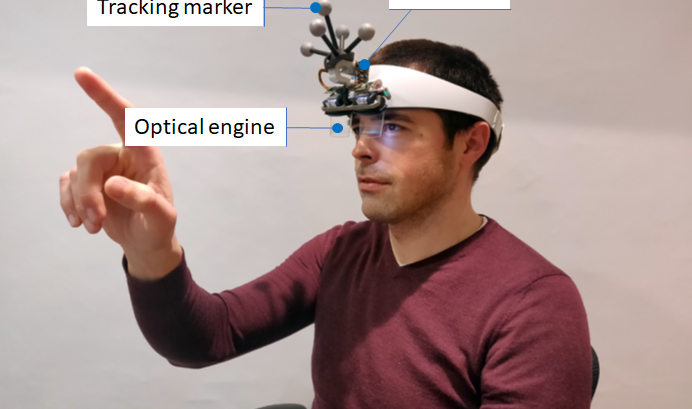

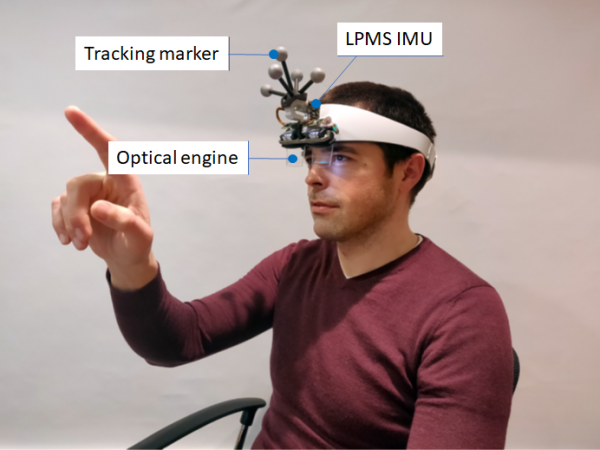

Figure 3 – LPVIZ is tracked with our LPVR-DUO technology, which combines ART outside-in optical tracking with our LPMS-CURS2 IMU module. This image shows Dr. Thomas Hauth performing an optical see-through (OST) calibration.

Figure 4 – The LPVIZ prototype is powered by a LUMUS optical engine. This waveguide-based technology has excellent optical characteristics that make it well suited to our use case.

Work in Progress

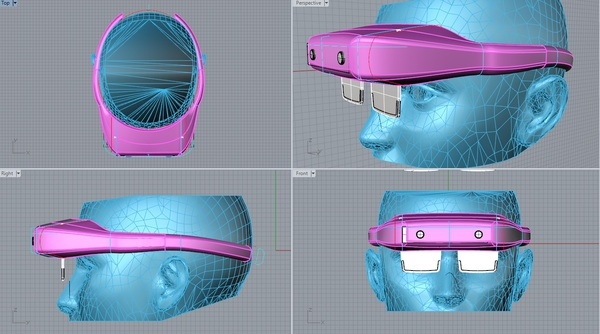

As the prototype images show, our hardware is still very much at the alpha stage. Nevertheless, we think it demonstrates the capabilities of our technology well and points in the right direction. In the next hardware version, which will already be close to a release model, we will reduce the size of the device with the following changes:

- Replace the large passive marker balls with active LED markers, or use inside-out tracking instead

- Combine all electronic components on a single compact board with only one VirtualLink connector

- Create a compact housing, with a glasses-like fixture instead of a VR-style ring mount (Figure 5)

Figure 5 – First draft of a CAD design for the housing of the LPVIZ release version