Revolutionizing AD/ADAS Testing: VR-Enhanced Vehicle-in-the-Loop

The automotive industry is in a race to develop smarter, safer, and more efficient vehicles. To meet these demands, engineers rely on sophisticated development processes. At LP-RESEARCH, we’re committed to creating tools that shape the future of mobility. That’s why we’re excited to announce a groundbreaking collaboration with IPG Automotive.

By integrating our advanced hardware and software with IPG Automotive’s CarMaker, we’ve created an immersive Virtual Reality (VR) experience for Vehicle-in-the-Loop (ViL) testing. This powerful solution allows engineers to test Autonomous Driving and Advanced Driver-Assistance Systems (AD/ADAS) on a proving ground with unprecedented realism and efficiency.

What is Vehicle-in-the-Loop (ViL)?

Vehicle-in-the-Loop is a powerful testing method that blends real-world driving with virtual simulation. An AD/ADAS-equipped vehicle drives on a physical test track while interacting with a dynamic virtual environment in real time.

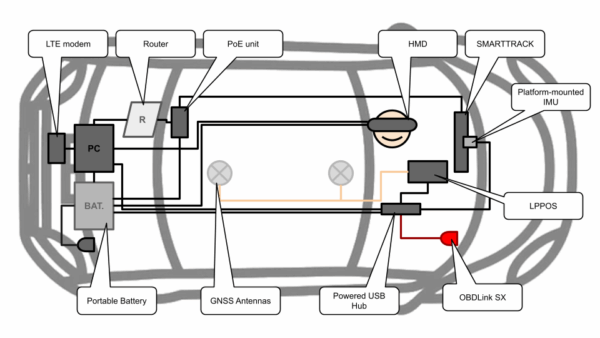

This approach lets engineers observe the vehicle’s response to countless simulated scenarios under controlled, repeatable conditions. The vehicle’s real-world dynamics are continuously fed back into the simulation, keeping the virtual world in sync with the physical state of the car. To support this constant flow of data, the test vehicle is equipped with tightly integrated hardware and software.

The Technology Stack: A Powerful Combination

Our collaboration combines best-in-class hardware and software from both LP-RESEARCH and IPG Automotive to deliver a complete ViL solution.

LP-RESEARCH Stack

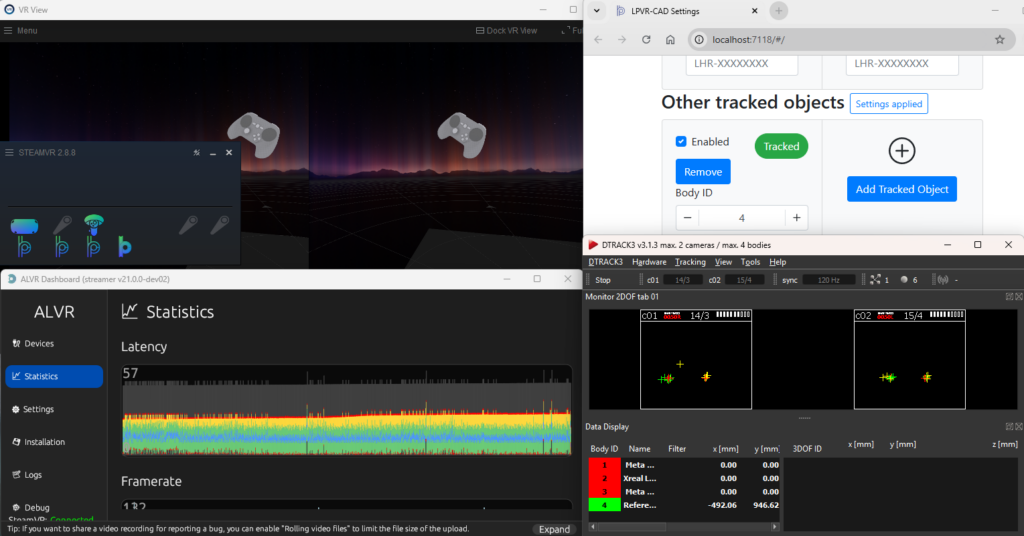

LPPOS: Our hardware system for acquiring physical data from the vehicle via an ELM327 or OBDLink OBD-II adapter, complemented by Global Navigation Satellite System (GNSS) antennas and advanced Inertial Measurement Units (IMUs). LPPOS includes FusionHub, our sensor-fusion software for high-precision, real-time vehicle state estimation.

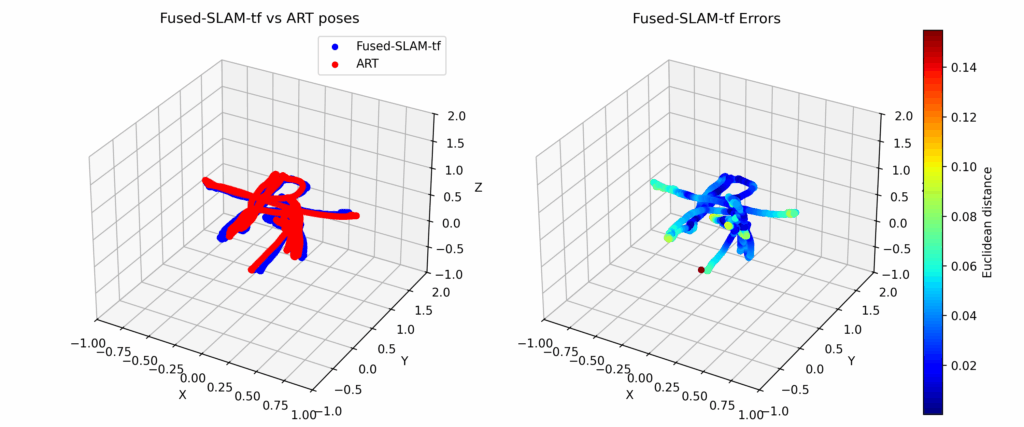

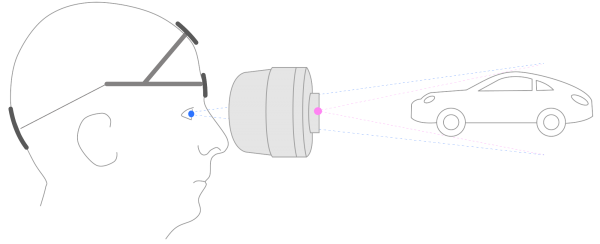

LPVR-DUO: Specialized sensor-fusion software that calculates the Head-Mounted Display (HMD) pose relative to the moving vehicle.

ART SMARTTRACK3: An advanced Infrared (IR) camera tracking system from our partner, Advanced Realtime Tracking (ART), for precise head tracking.
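FusionHub’s internal fusion algorithm is not described in this post. As a much simpler illustration of the general idea behind GNSS/IMU fusion, the sketch below uses a complementary filter on heading alone: the gyro yaw rate is integrated for smooth short-term tracking, and each GNSS course measurement gently pulls the estimate back to remove drift. The class `HeadingFilter`, its `gain` parameter, and the numbers in the usage lines are hypothetical and chosen for illustration only.

```python
import math


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians into the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class HeadingFilter:
    """Toy complementary filter fusing IMU yaw rate with GNSS course.

    Illustrative only: this is not FusionHub's algorithm, which estimates
    the full vehicle state rather than heading alone.
    """

    def __init__(self, initial_heading: float, gain: float = 0.02) -> None:
        self.heading = wrap_angle(initial_heading)  # radians
        self.gain = gain  # share of the GNSS error corrected per update (0..1)

    def predict(self, yaw_rate: float, dt: float) -> None:
        """Propagate with the gyro yaw rate (rad/s) over dt seconds."""
        self.heading = wrap_angle(self.heading + yaw_rate * dt)

    def correct(self, gnss_heading: float) -> None:
        """Nudge the estimate toward the GNSS course over ground (radians)."""
        # Shortest signed difference, so 179 deg vs -179 deg is a 2 deg error.
        error = wrap_angle(gnss_heading - self.heading)
        self.heading = wrap_angle(self.heading + self.gain * error)


# Usage: a stationary gyro reading while GNSS reports a 10 deg course.
f = HeadingFilter(initial_heading=0.0, gain=0.1)
for _ in range(100):
    f.predict(yaw_rate=0.0, dt=0.01)
    f.correct(gnss_heading=math.radians(10.0))
print(f"{math.degrees(f.heading):.2f} deg")
```

A small `gain` trusts the gyro more and smooths out GNSS noise; a larger one removes gyro drift faster. Since GNSS course over ground is only meaningful while the car is moving, a practical filter would likely skip corrections at low speed.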

IPG Automotive Stack

CarMaker Office: The simulation environment, enabled with the ViL add-on.

Movie NX: The visualization tool, enhanced with the VR add-on to create the immersive experience.

How It All Works Together

The key to this integration is a custom plugin that connects our FusionHub software with CarMaker. This plugin translates the real vehicle’s precise position and orientation (its “pose”) into the virtual environment.
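The plugin’s internals are not covered here, but any bridge of this kind has to map GNSS coordinates into the metric frame of the virtual scene. A minimal sketch, assuming a small test track, uses an equirectangular (local flat-Earth) approximation around a chosen track origin, followed by a hypothetical rotation and offset that align east/north with the scene’s x/y axes. The function names, the origin, and the alignment parameters are illustrative assumptions; a production system would more likely use a full WGS84-to-ENU conversion.

```python
import math
from typing import Tuple

# Mean Earth radius in metres; the flat-Earth approximation below is only
# reasonable over a few kilometres, i.e. a typical test track.
EARTH_RADIUS_M = 6_371_000.0


def geodetic_to_local_en(
    lat_deg: float, lon_deg: float, origin_lat_deg: float, origin_lon_deg: float
) -> Tuple[float, float]:
    """Approximate east/north offsets (m) of a GNSS fix from a track origin."""
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    # A degree of longitude shrinks with cos(latitude).
    east = EARTH_RADIUS_M * math.cos(math.radians(origin_lat_deg)) * d_lon
    north = EARTH_RADIUS_M * d_lat
    return east, north


def local_to_scene(
    east: float,
    north: float,
    rotation_deg: float,
    offset: Tuple[float, float] = (0.0, 0.0),
) -> Tuple[float, float]:
    """Rotate east/north counterclockwise into hypothetical scene x/y axes, then shift."""
    th = math.radians(rotation_deg)
    x = math.cos(th) * east - math.sin(th) * north + offset[0]
    y = math.sin(th) * east + math.cos(th) * north + offset[1]
    return x, y


# Usage with placeholder numbers (not the real test-track origin):
origin = (35.0, 139.0)
east, north = geodetic_to_local_en(35.001, 139.0, *origin)
x, y = local_to_scene(east, north, rotation_deg=0.0)
print(f"east={east:.2f} m, north={north:.2f} m -> scene x={x:.2f}, y={y:.2f}")
```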

The system workflow is a seamless loop of data capture, processing, and visualization:

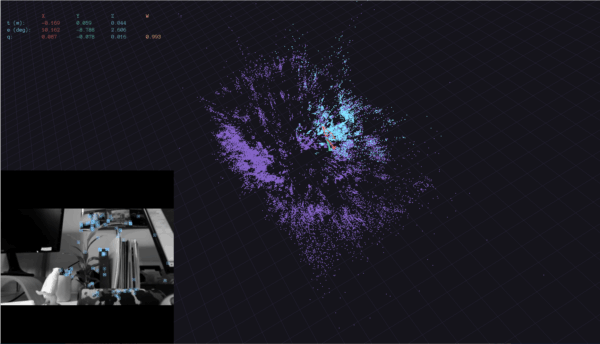

Data Acquisition: LPPOS gathers vehicle data via OBD, along with GNSS and IMU measurements, and sends them to FusionHub. The SMARTTRACK3 system monitors the HMD’s position, while IMUs on the headset and vehicle platform send orientation data to LPVR-DUO.

Sensor Fusion: FusionHub processes its inputs to calculate the vehicle’s exact pose in the real world. LPVR-DUO calculates the HMD’s pose relative to the moving vehicle’s interior.

Real-Time Communication: FusionHub streams the vehicle’s pose to a dedicated TCP server, which feeds the data directly into the CarMaker simulation via our custom plugin. LPVR-DUO communicates the headset’s pose to Movie NX using OpenVR, allowing the driver or engineer to naturally look around the virtual scene from inside the real car.

The entire LP-RESEARCH software stack and CarMaker Office run concurrently on a single computer inside the test vehicle, creating a compact and powerful setup.
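The wire format of the TCP stream between FusionHub and the CarMaker plugin is not documented in this post. Purely as an illustration, the sketch below assumes newline-delimited JSON with a hypothetical `VehiclePose` message; the field names and units are assumptions. The point it shows is general: TCP delivers a byte stream rather than discrete messages, so the receiver must buffer bytes and split on an explicit frame delimiter.

```python
import json
import socket
from dataclasses import asdict, dataclass
from typing import Iterator


@dataclass
class VehiclePose:
    """Hypothetical pose message; field names and units are assumptions."""
    t: float      # timestamp, s
    x: float      # position in the scene frame, m
    y: float
    z: float
    yaw: float    # orientation, rad
    pitch: float
    roll: float


def encode_pose(pose: VehiclePose) -> bytes:
    """Serialize one pose as a single line of JSON (newline-delimited framing)."""
    return (json.dumps(asdict(pose)) + "\n").encode("utf-8")


def read_poses(sock: socket.socket) -> Iterator[VehiclePose]:
    """Yield poses from a newline-delimited JSON byte stream until EOF.

    An incomplete trailing line left in the buffer at EOF is discarded.
    """
    buffer = b""
    while True:
        chunk = sock.recv(4096)
        if not chunk:  # the sender closed the connection
            return
        buffer += chunk
        # One recv() may hold a partial message or several messages,
        # so only complete lines are decoded.
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if line.strip():
                yield VehiclePose(**json.loads(line))


# Usage: a connected socket pair stands in for the FusionHub -> plugin link.
sender, receiver = socket.socketpair()
sender.sendall(encode_pose(VehiclePose(0.00, 1.0, 2.0, 0.0, 0.50, 0.0, 0.0)))
sender.sendall(encode_pose(VehiclePose(0.01, 1.1, 2.0, 0.0, 0.51, 0.0, 0.0)))
sender.close()
poses = list(read_poses(receiver))
receiver.close()
print(poses[-1])
```

A real client would also need to handle reconnects and stale or out-of-order timestamps, which this sketch leaves out.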

See It in Action

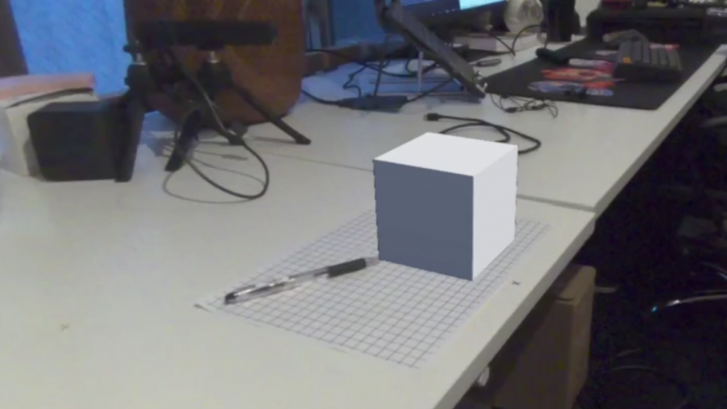

We configured a scene in the CarMaker Scenario Editor that meticulously replicates our test track near the LP-RESEARCH Tokyo Office. The video at the top of this post demonstrates the fully integrated system, showing how the vehicle’s real-world position perfectly matches its virtual counterpart. Notice how the VR perspective shifts smoothly as the copilot moves their head inside the vehicle.

This setup vividly illustrates how VR technology makes ViL testing more immersive, effective, and even fun.

Advance Your ViL Testing Today

Are you ready to integrate cutting-edge virtual reality into your Vehicle-in-the-Loop testing and help shape the future of mobility?

Fine-tuning the HMD view and virtual vehicle reference frame is crucial for an accurate simulation and depends on the specific test vehicle and scenario. Our team has the expertise to configure these parameters for you or provide expert guidance to ensure a perfect setup.
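One way to picture what this fine-tuning involves is as a chain of rigid transforms: the vehicle pose in the world (the kind of output FusionHub provides), a hand-tuned offset from the vehicle’s reference point to the head-tracking origin, and the head pose relative to the vehicle (the kind of output LPVR-DUO provides). The minimal sketch below composes such a chain with quaternions; the frame names, the `compose` helper, and all numeric values are hypothetical placeholders, not an actual calibration or the plugin’s real interface.

```python
import math
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length, Hamilton convention


class Pose(NamedTuple):
    """Rigid transform parent_from_child: position (m) and orientation."""
    position: Vec3
    orientation: Quat


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (computes q * v * conj(q))."""
    q_conj = (q[0], -q[1], -q[2], -q[3])
    _, x, y, z = q_mul(q_mul(q, (0.0, v[0], v[1], v[2])), q_conj)
    return (x, y, z)


def compose(a_from_b: Pose, b_from_c: Pose) -> Pose:
    """Chain two transforms: returns a_from_c."""
    r = rotate(a_from_b.orientation, b_from_c.position)
    p = tuple(pa + ra for pa, ra in zip(a_from_b.position, r))
    return Pose(p, q_mul(a_from_b.orientation, b_from_c.orientation))


def yaw_quat(yaw_rad: float) -> Quat:
    """Rotation about the vertical (z) axis."""
    return (math.cos(yaw_rad / 2), 0.0, 0.0, math.sin(yaw_rad / 2))


# Placeholder values, not a real vehicle calibration:
world_from_vehicle = Pose((10.0, 0.0, 0.0), yaw_quat(math.radians(90)))  # vehicle pose
vehicle_from_tracker = Pose((1.5, 0.0, 1.2), (1.0, 0.0, 0.0, 0.0))       # tuned offset
tracker_from_head = Pose((0.0, 0.4, 0.0), yaw_quat(math.radians(30)))    # head pose

world_from_head = compose(compose(world_from_vehicle, vehicle_from_tracker), tracker_from_head)
print(world_from_head.position)
```

Because the transforms are chained, an error in the `vehicle_from_tracker` offset shifts the virtual viewpoint consistently for every head pose, which is why this offset depends on the specific test vehicle and seating position.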

Contact us today to learn how our tailored solutions and expert support can elevate your AD/ADAS development process.