How to Use LPMS IMUs with LabView

Introduction

LabView by National Instruments (NI) is one of the most popular multi-purpose solutions for measurement and data acquisition tasks. A wide range of hardware components can be connected to a central control application running on a PC. This application includes a full graphical programming language for creating so-called virtual instruments (VIs).

Data can be acquired inside a LabView application via a variety of communication interfaces, such as Bluetooth or a serial port. A LabView driver that can communicate with our LPMS units has been a frequent request from our customers for some time, so we decided to create this short example as a general guideline.

A Simple Example

The example shown here works specifically with LPMS-B2, but it can easily be customized to work with other sensors in our product line-up. To communicate with LPMS-B2, we use LabView’s built-in Bluetooth access modules. We then parse the incoming data stream to display the measured values.

The source code repository for this example is here.

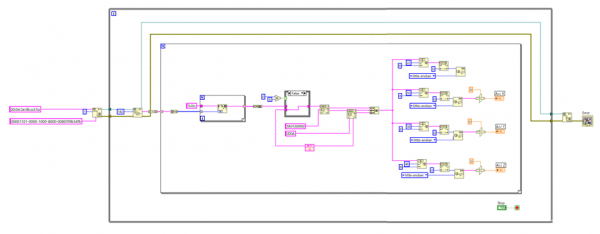

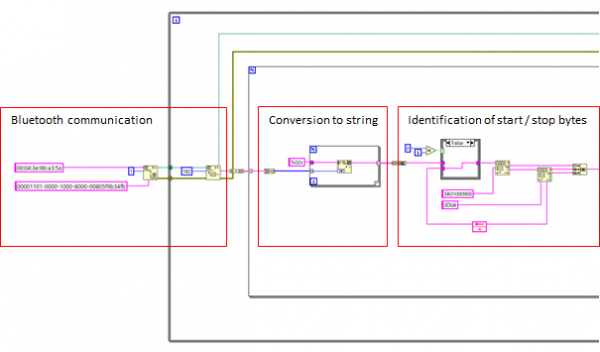

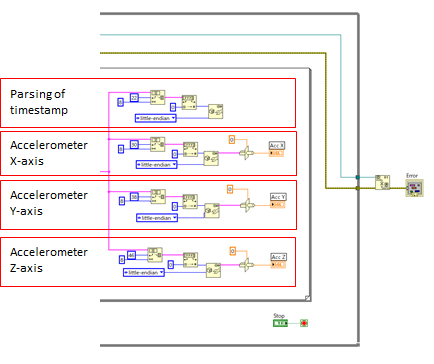

Figure 1 – Overview of a minimal virtual instrument (VI) to acquire data from LPMS-B2

Fig. 1 shows an overview of the example design, which acquires the accelerometer X, Y and Z axes of the IMU and displays them on a simple front panel. Figs. 2 and 3 below show the virtual instrument in more detail. The raw data stream is read from the Bluetooth interface and converted into a string. The string is then searched for the start and stop character sequences that delimit a data packet. Finally, the actual values are extracted according to their position within the packet.

Figure 2 – Bluetooth access and initial data parsing

Figure 3 – Extraction of timestamp, accelerometer X, Y, Z values
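
For readers who prefer a text-based view, the parsing steps described above (find the start and stop sequences, then extract values by position) can be sketched in Python. This is only an illustration of the idea, not a translation of the VI. The start and stop markers, the data offset and the payload layout (timestamp followed by accelerometer X, Y, Z as little-endian 32-bit floats) are assumptions made for this sketch, and the names `extract_frames` and `parse_frame` are hypothetical. Please check the LPMS manual for the actual packet format of your sensor configuration.

```python
import struct

# All values below are assumptions for illustration; verify them against the LPMS manual.
START = b"\x3a"                 # assumed start marker
STOP = b"\x0d\x0a"              # assumed stop marker
DATA_OFFSET = 0                 # assumed number of header bytes to skip after START
PAYLOAD = struct.Struct("<4f")  # assumed layout: timestamp, acc X, acc Y, acc Z (float32)


def extract_frames(buffer: bytes):
    """Split a raw byte stream into frames found between START and STOP.

    Returns (frames, remainder). The remainder holds an incomplete trailing
    frame that should be prepended to the next chunk read from the sensor.
    """
    frames = []
    pos = 0
    while True:
        begin = buffer.find(START, pos)
        if begin < 0:
            return frames, b""            # no further start marker, drop leftovers
        end = buffer.find(STOP, begin + len(START))
        if end < 0:
            return frames, buffer[begin:]  # frame not complete yet
        frames.append(buffer[begin + len(START):end])
        pos = end + len(STOP)


def parse_frame(frame: bytes):
    """Extract timestamp and accelerometer values by their position in the frame."""
    if len(frame) < DATA_OFFSET + PAYLOAD.size:
        return None                        # too short to contain the expected values
    timestamp, acc_x, acc_y, acc_z = PAYLOAD.unpack_from(frame, DATA_OFFSET)
    return {"timestamp": timestamp, "acc": (acc_x, acc_y, acc_z)}


if __name__ == "__main__":
    # Synthetic test stream: noise, one complete frame, one incomplete frame.
    stream = b"junk" + START + PAYLOAD.pack(0.5, 1.0, 0.0, -1.0) + STOP + START + b"\x00"
    frames, rest = extract_frames(stream)
    for frame in frames:
        print(parse_frame(frame))
```

Note that a plain search for the stop marker can split a frame early if the same byte values happen to occur inside the binary data. A more robust parser would rely on a length field or checksum if the packet format provides one.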

Notes

Please note that the example requires the Bluetooth ID of the LPMS-B2 in use to be entered manually. The data parsing configuration is static, so the VI only works if the sensor sends the data it expects. The output data of the sensor therefore needs to be configured and saved to the sensor's flash memory using the LPMS-Control application. For reference, please check the LPMS manual.

An initial version of this virtual instrument was kindly provided to us by Dr. Patrick Esser, head of the Movement Science Group at Oxford Brookes University, UK.