Introducing LP-Research’s SLAM System with Full Fusion for Next-Gen AR/VR Tracking

At LP-Research, we have been pushing the boundaries of spatial tracking with our latest developments in Visual SLAM (Simultaneous Localization and Mapping) and sensor fusion technologies. Our new SLAM system, combined with what we call “Full Fusion,” is designed to deliver highly stable and accurate 6DoF tracking for robotics as well as augmented and virtual reality applications.

System Setup

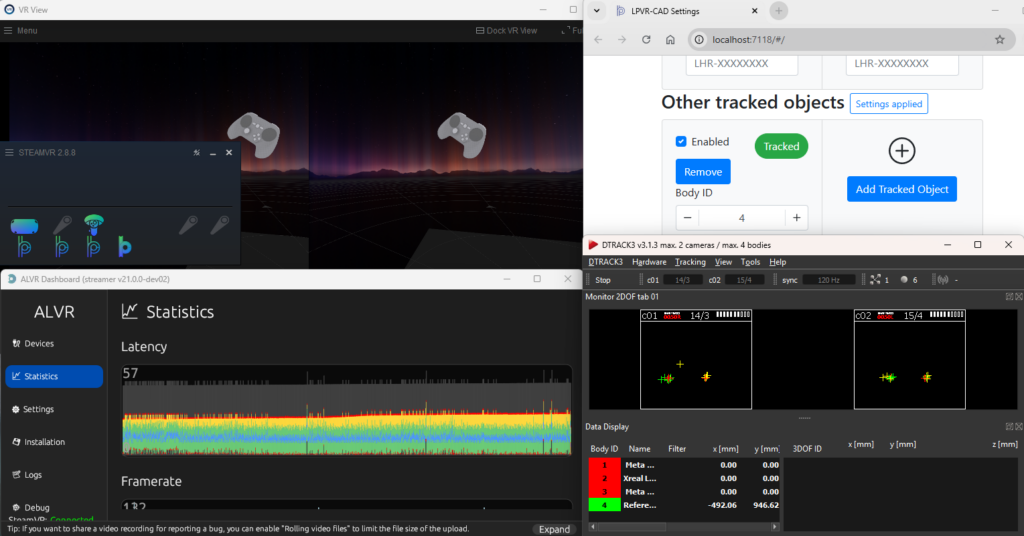

To demonstrate the progress of our development, we ran LPSLAM together with FusionHub on a host computer and forwarded the resulting pose to a Meta Quest 3 mixed reality headset for visualization using LPVR-AIR. We created a custom 3D-printed mount to attach the sensors needed for SLAM and Full Fusion, a ZED Mini stereo camera and an LPMS-CURS3 IMU sensor, to the headset.

This mount ensures proper alignment of the sensor and camera with respect to the headset’s optical axis, which is critical for accurate fusion results. The system connects via USB and runs on a host PC that communicates wirelessly with the HMD. An image of how IMU and camera are attached to the HMD is shown below.

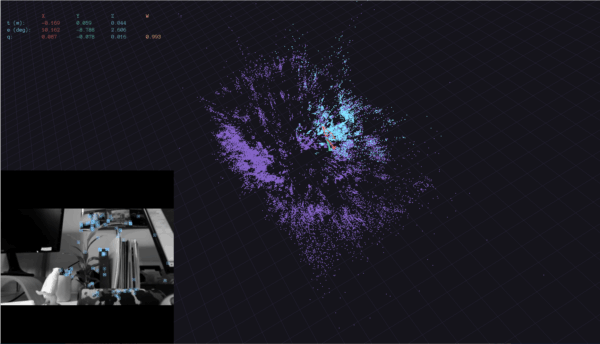

At the current stage of development, we have run our tests in our laboratory. The images below show a photo of the environment next to the LPSLAM map generated from it.

Tracking Across Larger Areas

A walk through the office based on a pre-built map yields good results. The fusion in this experiment is our regular IMU-optical fusion, which does not integrate accelerometer data to obtain translation information. As a result, position tracking is briefly interrupted in areas where feature points aren’t found. Full Fusion, described in the next section, at least partially solves this problem.

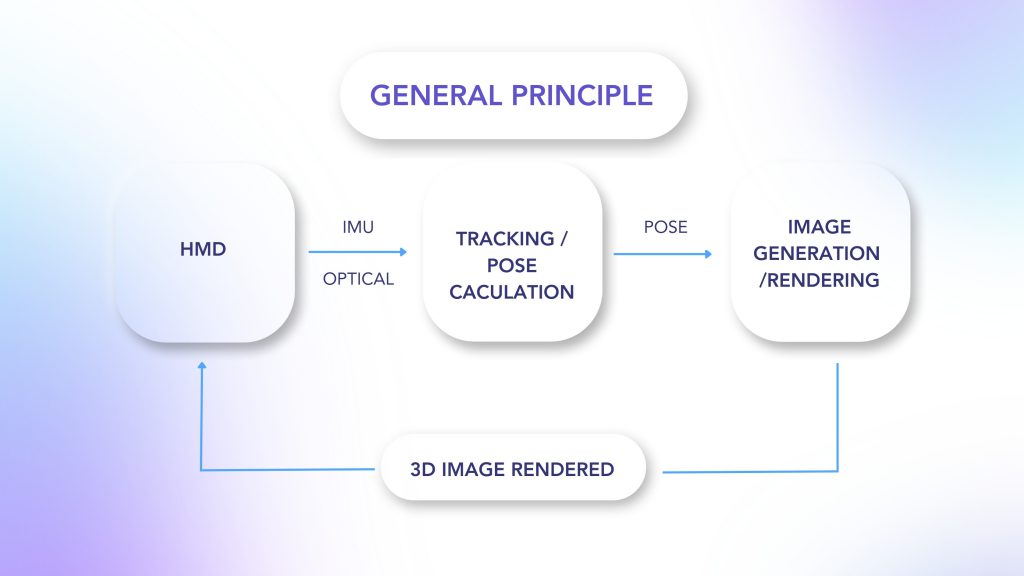

What is Full Fusion?

Traditional tracking systems rely either on Visual SLAM or IMU (Inertial Measurement Unit) data, often with one compensating for the other. Our Full Fusion approach goes beyond orientation fusion and integrates both IMU and SLAM data to estimate not just orientation but also position. This combination provides smoother, more stable tracking even in complex, dynamic environments where traditional methods tend to struggle.

By fusing IMU velocity estimates with visual SLAM pose data through a specialized filter algorithm, our system handles rapid movements gracefully and removes the jitter seen in SLAM-only tracking. The IMU handles fast short-term movements while SLAM ensures long-term positional stability. Our latest releases even support alignment using fiducial markers, allowing the virtual scene to anchor precisely to the real world. The video below shows the SLAM in conjunction with the Full Fusion.
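To make the division of labor between IMU and SLAM concrete, here is a minimal one-dimensional sketch in Python. It is not the filter used in FusionHub, whose internals are not described here. It is a simple fixed-gain predict/correct loop, and every name, gain, and sample rate in it (`State`, `predict`, `correct`, `simulate`, `kp`, `kv`, 100 Hz IMU, 10 Hz SLAM, the bias value) is an assumption chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class State:
    position: float = 0.0  # metres
    velocity: float = 0.0  # metres per second


def predict(state: State, accel: float, dt: float) -> State:
    """Dead-reckon one IMU sample: integrate acceleration over dt."""
    return State(
        position=state.position + state.velocity * dt + 0.5 * accel * dt * dt,
        velocity=state.velocity + accel * dt,
    )


def correct(state: State, slam_position: float,
            kp: float = 0.2, kv: float = 1.0) -> State:
    """Nudge the state toward a SLAM position fix.

    kp (unitless) and kv (1/s) are hand-picked illustrative gains,
    not values taken from any LP-Research product.
    """
    residual = slam_position - state.position
    return State(
        position=state.position + kp * residual,
        velocity=state.velocity + kv * residual,
    )


def simulate(use_slam: bool, seconds: float = 10.0) -> float:
    """Stationary device with a biased accelerometer; returns final position."""
    dt = 0.01         # 100 Hz IMU (assumed rate)
    accel_bias = 0.1  # m/s^2 of uncorrected accelerometer bias (assumed)
    slam_every = 10   # one SLAM fix per 10 IMU samples, i.e. 10 Hz (assumed)
    state = State()
    for step in range(1, int(round(seconds / dt)) + 1):
        state = predict(state, accel_bias, dt)   # fast, short-term motion
        if use_slam and step % slam_every == 0:
            state = correct(state, slam_position=0.0)  # long-term anchor
    return state.position


if __name__ == "__main__":
    print("IMU only :", simulate(use_slam=False))
    print("IMU+SLAM :", simulate(use_slam=True))
```

With these made-up numbers, integrating the IMU alone drifts by roughly 5 m over 10 s, while the corrected estimate stays within a few centimetres of the true position. A production filter would typically also estimate the sensor bias and weight each correction by its uncertainty rather than use fixed gains.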

Real-World Testing and Iteration

We’ve extensively tested this system in both lab conditions and challenging real-world environments. Our recent experiments demonstrated excellent results. By integrating our LPMS IMU sensor and running our software pipeline (LPSLAM and FusionHub), we achieved room-scale tracking with sub-centimeter accuracy and rotation errors as low as 0.45 degrees.

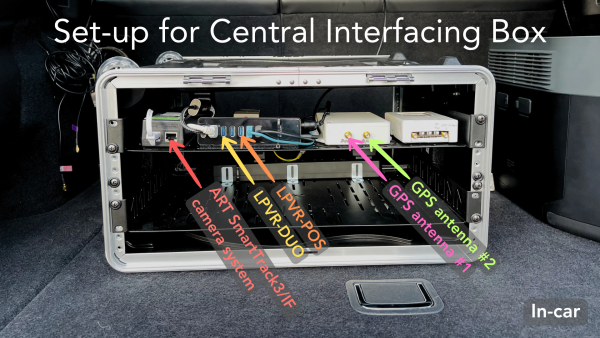

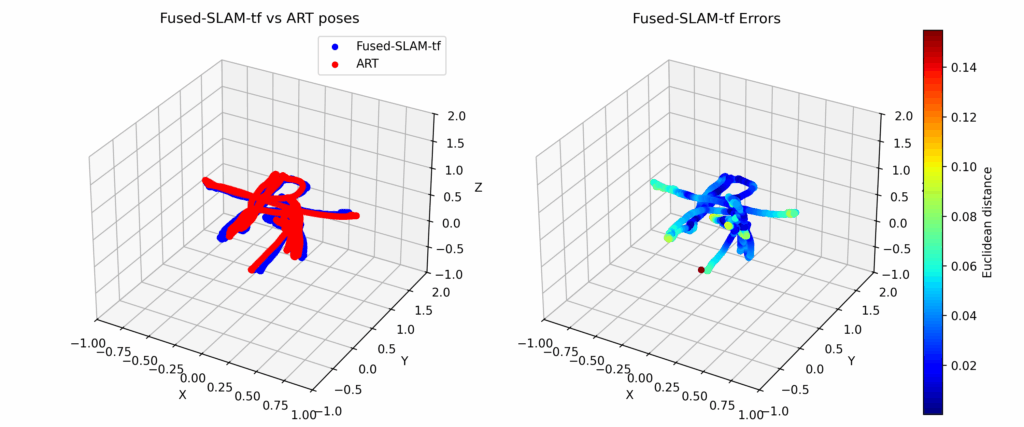

To evaluate the performance of the overall solution, we compared the output from FusionHub with pose data recorded by an ART Smarttrack 3 tracking system. The accuracy of an ART tracking system is in the sub-millimeter range, which is sufficient to characterize the performance of our SLAM. The result of one of several measurement runs is shown in the image below. Note that the two systems were spatially aligned and timestamp-synchronized so that their poses could be compared correctly.
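As a rough illustration of what such a comparison involves, the Python sketch below resamples a reference trajectory at the timestamps of the estimate and then computes a position RMSE and a per-pose rotation error. This is not our actual evaluation tooling. The function names are hypothetical, and the sketch assumes both trajectories are already expressed in the same coordinate frame, share a common clock, and store quaternions as (w, x, y, z).

```python
import math
from bisect import bisect_right


def interpolate_position(times, positions, t):
    """Linearly interpolate a reference position at time t.

    times must be sorted ascending; t is clamped to the recorded range.
    """
    if t <= times[0]:
        return tuple(positions[0])
    if t >= times[-1]:
        return tuple(positions[-1])
    i = bisect_right(times, t)          # first sample strictly after t
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)            # blend weight between the neighbours
    return tuple(a + w * (b - a) for a, b in zip(positions[i - 1], positions[i]))


def position_rmse(estimated, reference):
    """Root-mean-square Euclidean distance between paired 3D positions."""
    squared = [math.dist(e, r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))


def rotation_error_deg(q_a, q_b):
    """Angle in degrees between two unit quaternions given as (w, x, y, z)."""
    # abs() treats q and -q as the same rotation; min() guards acos
    # against rounding slightly above 1.0.
    dot = abs(sum(a * b for a, b in zip(q_a, q_b)))
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


if __name__ == "__main__":
    ref_times = [0.0, 1.0]
    ref_positions = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0)]
    print(interpolate_position(ref_times, ref_positions, 0.25))

    identity = (1.0, 0.0, 0.0, 0.0)
    quarter_turn_z = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
    print(rotation_error_deg(identity, quarter_turn_z))
```

Linear interpolation is adequate when the reference system samples much faster than the motion changes. Orientation would need spherical interpolation (slerp) instead, which is omitted here for brevity.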

Developer-Friendly and Cross-Platform

The LP-Research SLAM and FusionHub stack is designed for flexibility. Components can run on the PC and stream results to an HMD wirelessly, enabling rapid development and iteration. The system supports OpenXR-compatible headsets and has been tested with Meta Quest 3, Varjo XR-3, and more. Developers can also log and replay sessions for detailed tuning and offline debugging.

Looking Ahead

Our roadmap includes support for optical flow integration to improve SLAM stability further, expanded hardware compatibility, and refined UI tools for better calibration and monitoring. We’re also continuing our efforts to improve automated calibration and simplify the configuration process.

This is just the beginning. If you’re building advanced AR/VR systems and need precise, low-latency tracking that works in the real world, LP-Research’s Full Fusion system is ready to support your journey.

To learn more or get involved in our beta program, reach out to us.