Design of an Efficient CAN-Bus Network with LPMS-IG1

Introduction to Designing an Efficient CAN-Bus Network

This article describes how to design an efficient high speed CAN-bus network with LPMS-IG1. We offer several sensor types with a CAN bus connection. The CAN bus is a popular network standard for applications like automotive, aerospace and industrial automation where connecting a large number of sensor and actuation units with a limited amount of cabling is required.

While creating a CAN bus network is not difficult by itself, there are a few key aspects that an engineer should follow in order to achieve optimum performance.

Efficient CAN-Bus Network Topology

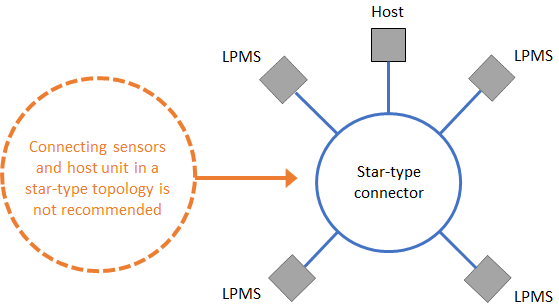

A common mistake when designing a CAN bus network is to use a star topology to connect devices to each other. In this topology the signal from each device is routed to a center piece by connections of similar length. The center piece is connected to the host to acquire and distribute data to the devices of the network.

For reaching the full performance of a CAN bus network, we strongly discourage using this topology. Most CAN bus setups designed in this way will fail to work reliably and at high speed.

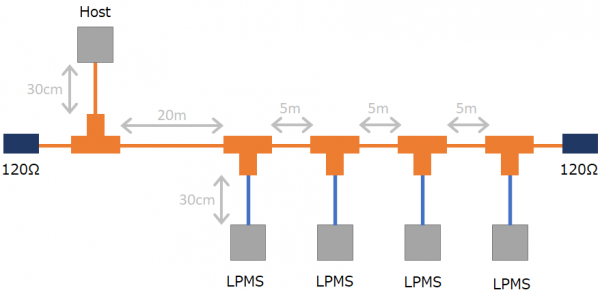

The CAN bus standard’s fundamental concept is to work best in a daisy chain configuration, with one sensor unit or the data acquisition host being the first device in the chain and one device being the last in the network.

Maximum CAN-Bus Speed and Cable Length

A key aspect for the design of an efficient high speed CAN-bus network is to correctly adjust bus cable lengths. The bus line running past each device is to be the longest connection in the network. Each sensor needs to be connected to the bus by a short stub connection. A typical length for such a stub connection is 10-30cm, whereas the main bus line can have a length of hundreds of meters, depending on the desired transmission speed.

| Speed in bit/s | Maximum Cable Length |

|---|---|

| 1 Mbit/s | 20 m |

| 800 kbit/s | 40 m |

| 500 kbit/s | 100 m |

| 250 kbit/s | 250 m |

| 125 kbit/s | 500 m |

Note that a CAN bus network needs to be terminated using a 120 Ohm resistor at each end. This is especially important for bus length of more than 1-2m and should be considered as general good practice.

LPMS-IG1 CAN-Bus Configuration

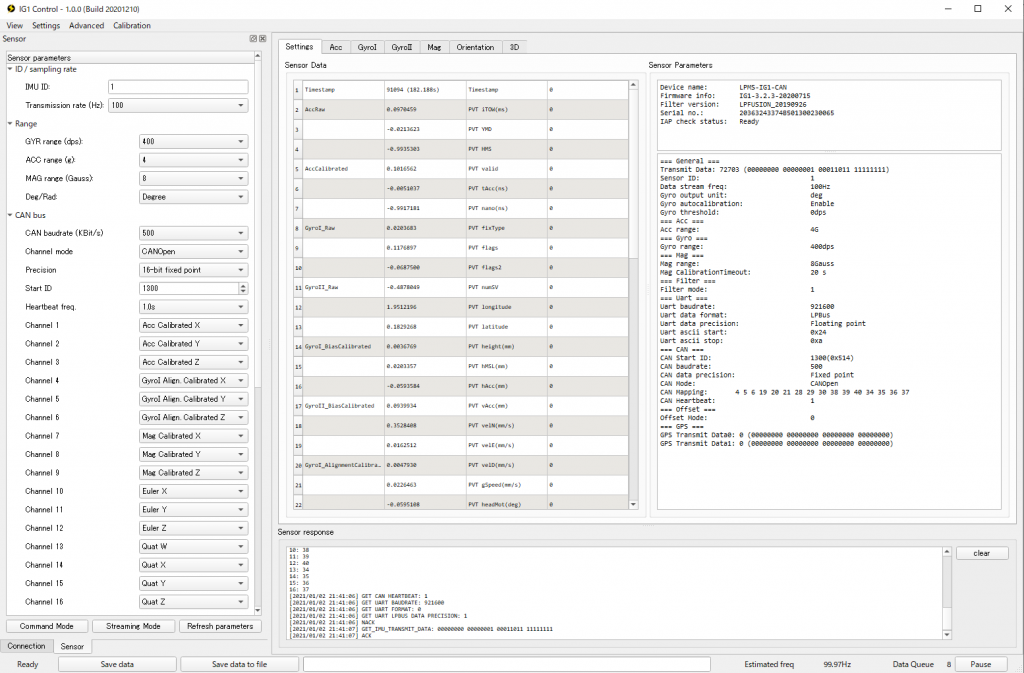

One of our products with a CAN bus interface option is our LPMS-IG1 high performance inertial measurement unit. LPMS-IG1 can be flexibly configured to satisfy user requirements. It has the ability to output data using the CANopen standard, freely configurable sequential streaming or our proprietary binary format LP-BUS. These and further parameters can be set via our IG1-Control data acquisition application.

Some CAN bus data loggers that rely on the CANopen standard require users to provide an EDS file to automatically configure each device on the network. While we don’t support the automatic generation of EDS files from our data acquisition applications, depending on the settings in IG1-Control or LPMS-Control, it is possible to manually create an EDS file as described in this tutorial.

In this article we give a few essential insights into how to design an efficient high speed CAN-bus network with LPMS-IG1. If you would like to know more about this topic or have any questions, let us know!