UPDATE 1: Full SteamVR platform support

UPDATE 2: Customer use case with AUDI and Lightshape

UPDATE 3: Customer use case with Bandai Namco

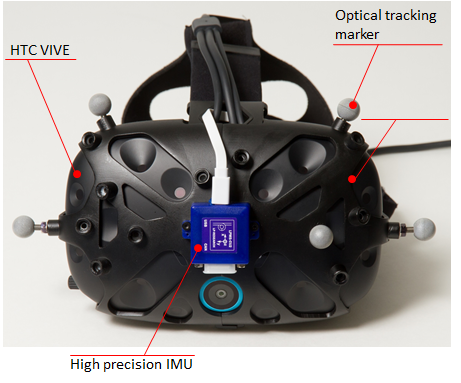

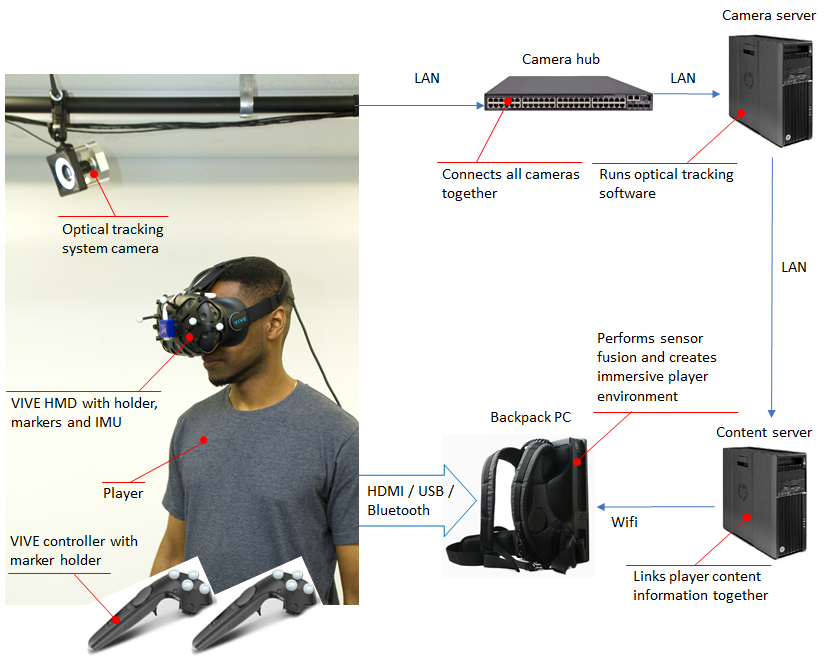

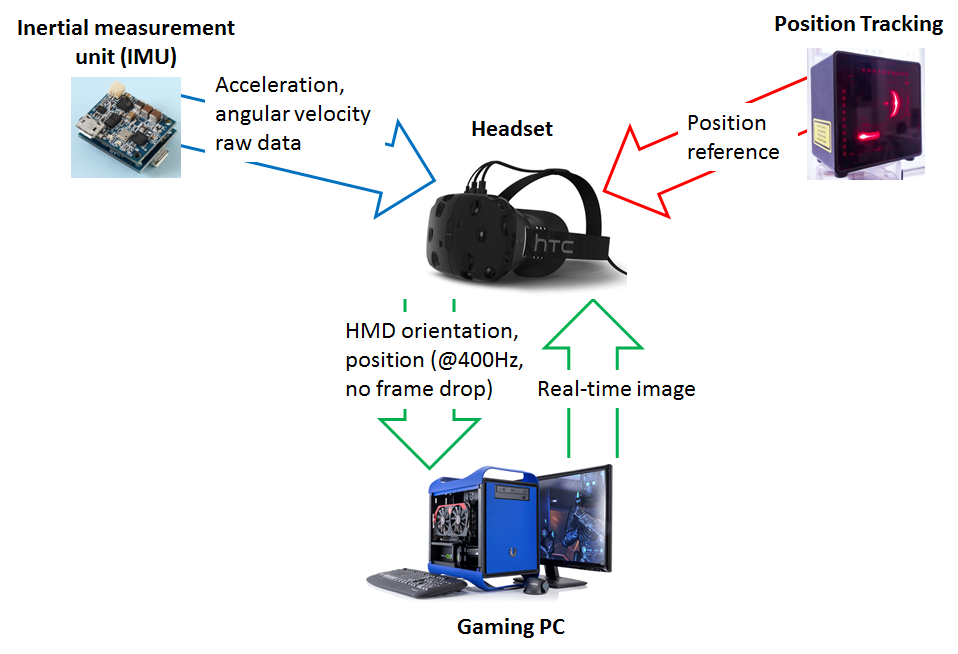

Consumer virtual reality head-mounted display (HMD) systems such as the HTC VIVE support so-called room-scale tracking. These systems track a user's head and controller motion not only in a seated or otherwise stationary position, but also during free movement throughout a room. The room-scale tracking volume is limited by the capabilities of the specific system and usually covers around 5m x 5m x 3m. While this space may be sufficient for single-user games or applications, multi-user, location-based VR applications such as arcade-style game setups or enterprise applications require larger tracking volumes.

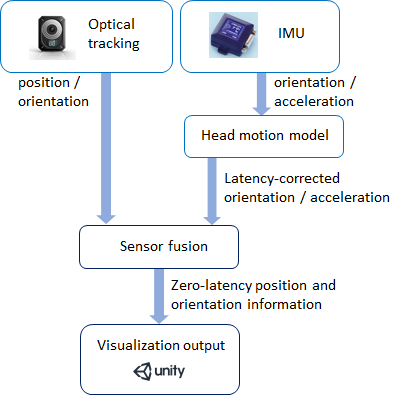

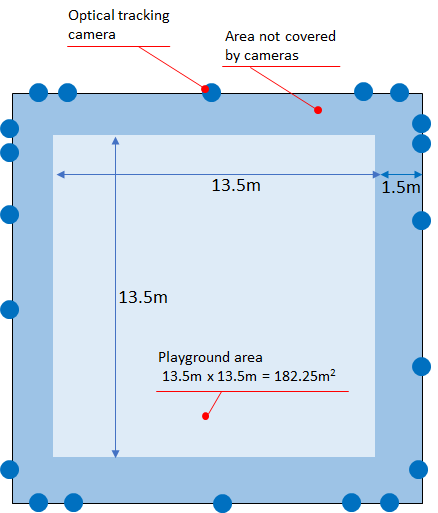

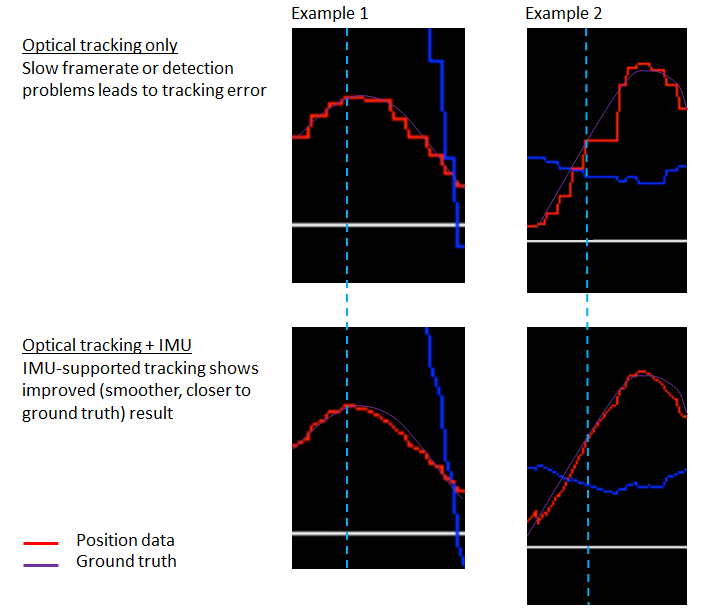

Optical tracking systems such as Optitrack offer tracking volumes of up to 15m x 15m x 3m. Although the positioning accuracy of optical tracking systems is in the sub-millimeter range, their orientation measurement in particular is often not good enough to provide an immersive experience to the user. Image processing and signal routing may introduce additional latency.

Our location-based VR / large room-scale tracking solution solves this problem by combining optical tracking information with inertial measurement data, using a special predictive algorithm based on a head motion model.
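To make the idea of combining the two data sources more concrete, here is a minimal sketch of a generic optical/inertial fusion for a single rotation axis (yaw). It is not the algorithm used in our product: it substitutes a plain complementary filter plus a constant-rate extrapolation for the head motion model, and all names (`YawFusion`, `blend`, `lookahead`) are hypothetical. It assumes the gyroscope delivers an angular rate in rad/s at a high update rate and the optical system delivers an absolute yaw angle at a lower rate.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


class YawFusion:
    """Illustrative complementary filter for one axis (hypothetical, not the product algorithm)."""

    def __init__(self, blend=0.02):
        self.blend = blend  # fraction of the optical/inertial error removed per optical sample
        self.yaw = 0.0      # fused yaw estimate in rad
        self.rate = 0.0     # most recent gyro rate in rad/s

    def update_imu(self, gyro_rate, dt):
        """Integrate the gyro rate: low latency, but drifts with any sensor bias."""
        self.rate = gyro_rate
        self.yaw = wrap_angle(self.yaw + gyro_rate * dt)

    def update_optical(self, optical_yaw):
        """Pull the estimate towards the drift-free optical measurement."""
        error = wrap_angle(optical_yaw - self.yaw)
        self.yaw = wrap_angle(self.yaw + self.blend * error)

    def predict(self, lookahead):
        """Extrapolate the yaw by `lookahead` seconds, assuming a constant angular rate."""
        return wrap_angle(self.yaw + self.rate * lookahead)
```

In such a setup, `update_imu` would be called for every inertial sample, `update_optical` whenever a new optical pose arrives, and `predict` just before rendering with the expected pipeline latency as `lookahead`. The gyro keeps the estimate responsive between optical frames, while the optical data prevents the integrated gyro signal from drifting.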

| | |
|---|---|
| Compatible HMDs: | HTC VIVE, HTC VIVE Pro |
| Compatible optical tracking systems: | Optitrack, VICON, ART, Qualisys, all VRPN-compatible tracking systems |
| Compatible software: | Unity, Unreal, Autodesk VRED, all SteamVR-compatible applications |

This location-based VR solution is now available from LP-RESEARCH. Please contact us here for more information or a price quotation.